Do you dream of using WeBWorK to teach students Python? If so, then you have very specific dreams. After the jump I will talk about a new set of macros recently created for use in CS courses at WCU. Rather than being yet another automated program tester (of which there are many), these macros are aimed at filling the gap between labs and projects in our introductory computer science courses.

Introduction

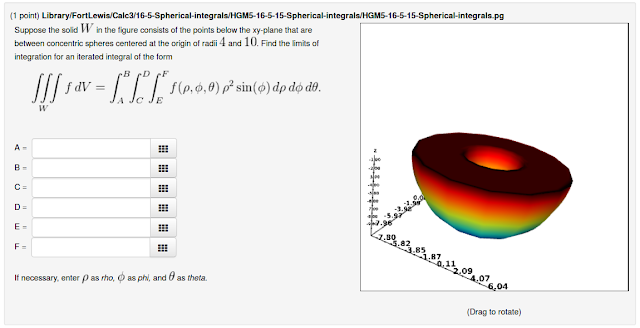

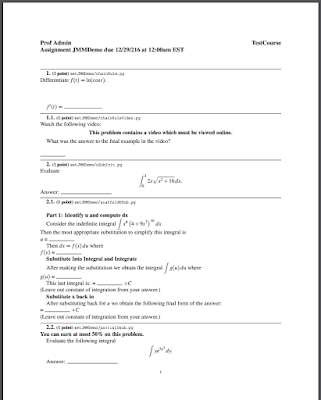

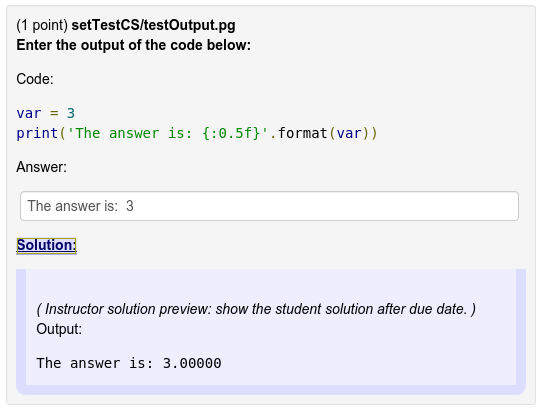

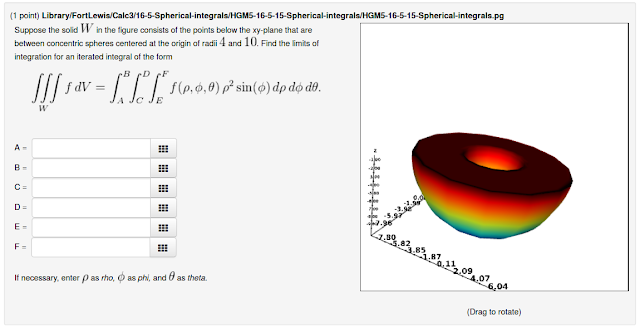

In our introductory computer science courses there seems to be something of a gap between lectures and labs, where students are introduced to new features and concepts in Python, and class projects, which are larger, relatively involved programs meant to test and expand students' knowledge of those concepts. Outside of the weekly labs, however, students have no opportunity to practice basic syntax and concepts. Our goal is to create weekly homework assignments that help students improve their fundamentals. Of course, we want to bring along the instant grading and feedback that are a hallmark of WeBWorK. To accomplish this we have created a collection of PG macros. The macros provide access to two main types of objects. The first is the PythonOutput object. The pg file includes a short Python script that is used to create the object. The code is then run, and the standard output of the script becomes the correct answer for the problem. The basic idea is that you present the code to students and ask them what the output will be. For example:

This problem tests whether students understand the formatting syntax for Python strings. The student's answer is compared exactly, without any of the smart comparisons you might usually expect from WeBWorK. (Of course, students could just run the code and check the output, but that is not the absolute worst learning outcome.)
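To make the "exact comparison" point concrete, here is a small Python sketch. It is not part of the WCU macros. It captures a snippet's standard output, which is roughly how a PythonOutput answer is derived, and then compares a student's answer character for character. The snippet and the helpers `captured_stdout` and `exact_match` are hypothetical. Ignoring a single trailing newline is an assumption about how a one-line answer blank treats `print()`'s final newline.

```python
import contextlib
import io

# Hypothetical problem snippet exercising Python's format-spec mini-language.
SNIPPET = 'print("{:>6.2f}|{:<4}|".format(3.14159, "ab"))'


def captured_stdout(source: str) -> str:
    """Run *trusted* source in-process and return everything it printed."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(source, {})
    return buffer.getvalue()


def exact_match(student_answer: str, correct_output: str) -> bool:
    """Character-for-character comparison.

    Assumption: one trailing newline from print() is ignored, since a
    one-line answer blank cannot hold it. Everything else, including
    spaces, must match exactly.
    """
    if correct_output.endswith("\n"):
        correct_output = correct_output[:-1]
    return student_answer == correct_output


correct = captured_stdout(SNIPPET)
print(repr(correct))                         # '  3.14|ab  |\n'
print(exact_match("  3.14|ab  |", correct))  # True
print(exact_match("3.14|ab|", correct))      # False: the padding matters
```

A lenient comparison that collapsed whitespace would accept the second answer. For a format-spec question, that would defeat the purpose of the problem.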

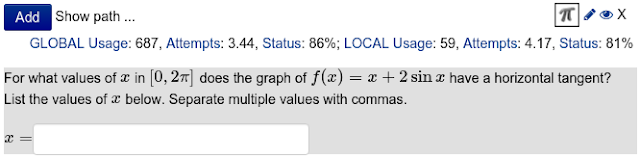

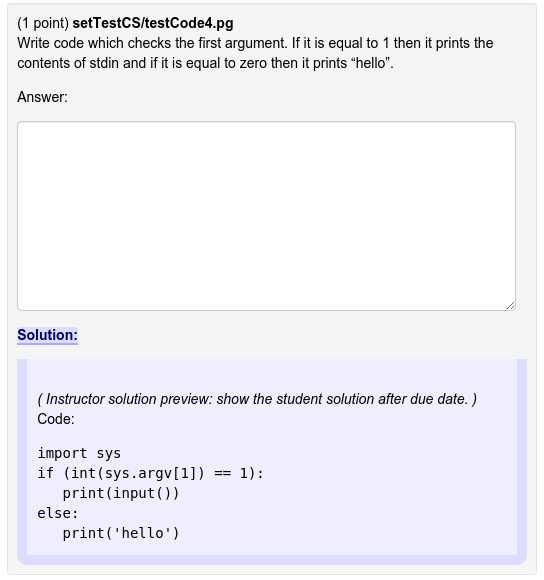

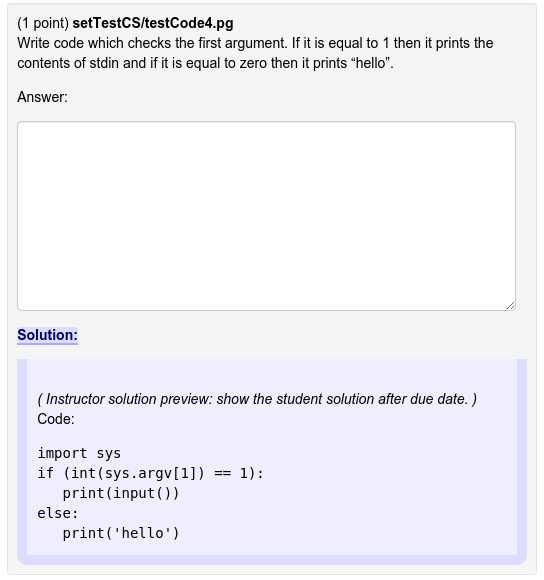

The second type of object is PythonCode. As before, the pg file includes a short Python script that is used to create the object. In this case, however, students provide their own Python script, and its output is compared to the output of the "correct" Python script. The basic template for this kind of problem is that students are given a description of what their code should do and must write a script that fulfils that description.

This problem asks students to create a script that reads the first argument provided to the script. If that argument is equal to 1, the script prints the contents of the standard input; otherwise it prints "hello". (You can specify the arguments and the contents of stdin in the pg file; more on that later.) These types of problems will probably work better if students are asked to write relatively short scripts. However, there is support for running fairly complicated scripts, including running multiple test cases.
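For a sense of what a student might submit for the problem just described, here is one possible solution, written as a sketch. The helper name `respond` is hypothetical and only exists so the logic can be exercised without a terminal. Echoing stdin unchanged, without adding an extra trailing newline, is an assumption about what the instructor's reference script prints.

```python
import io
from typing import List, TextIO


def respond(args: List[str], stdin: TextIO) -> str:
    """Return stdin's contents if the first argument is "1", otherwise "hello"."""
    # `args` corresponds to sys.argv[1:], i.e. it excludes the script name.
    # Command-line arguments are strings, so compare against "1", not 1.
    if args and args[0] == "1":
        return stdin.read()
    return "hello\n"


# In the submitted script the entry point would be:
#     import sys
#     sys.stdout.write(respond(sys.argv[1:], sys.stdin))
# Here the logic is exercised with an in-memory stdin instead.
print(respond(["1"], io.StringIO("line one\nline two\n")), end="")
print(respond(["2"], io.StringIO("ignored")), end="")
```

Note that comparing the argument to the string `"1"` is a common stumbling point. Students who write `sys.argv[1] == 1` will always get "hello".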

Of course, since you are running code provided by students via a web browser, security is going to be a concern. All Python code evaluated by this system is run in a code jail based on the edX CodeJail. In particular, the code is run as a separate user using a specially cordoned-off Python executable. AppArmor restricts this executable (python3 in our case) so that it can only access the Python libraries in the sandbox and the temporary files created by the jailing code. Because the enforcement happens at the kernel level via AppArmor, the system is reasonably secure.

Object Methods

Let's take a closer look at the methods available to these objects, and then we will do a deeper dive into actually coding problems.

PythonCode() and PythonOutput() For both objects, these constructor methods take the Python code as a string and return the object. Typically you would do something like:

    $python = PythonCode(<<EOP);
    for i in range(0, 10):
        print("The number is {}".format(i))
    EOP

code() This either sets or returns the code used to run the object.

options() This method takes various options that supply the code with standard input, command-line arguments, and even text files. The possibilities are:

- files=>[['file_name','content'],['file_name_2','other content']] - This is a list (reference) of (references to) pairs; each pair is a filename and a bytestring of contents to write into that file. These files will be created in a jailed tmp directory and cleaned up automatically. No subdirectories are supported in the filename. The files will be available to the jailed code in its current directory.
- argv=>['arg1','arg2'] - This is an array ref of command-line arguments which will be provided to the code.
- stdin=>"This string \n will be \n in stdin." - This is a string and will be provided to the code through the stdin channel.
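The following sketch shows how the three options map onto a running program. It uses only Python's standard `subprocess`, `tempfile`, and `pathlib` modules, and `run_with_options` is a hypothetical name. This is not how the WCU macros or edX CodeJail are implemented, and it provides no sandboxing at all. It only mirrors the behavior described above: files written into a temporary working directory (bare names only), arguments appended to `sys.argv`, and a string fed to stdin.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Sequence, Tuple


def run_with_options(
    code: str,
    files: Sequence[Tuple[str, str]] = (),
    argv: Sequence[str] = (),
    stdin: str = "",
    timeout: float = 5.0,
) -> Tuple[int, str, str]:
    """Run `code` with the given files, argv and stdin; return (status, stdout, stderr).

    This is NOT a sandbox: it only mimics how the three options reach the program.
    """
    with tempfile.TemporaryDirectory() as workdir:
        for name, content in files:
            # Mirror the "no subdirectories" rule: only bare file names are allowed.
            if name in ("", ".", "..") or Path(name).name != name:
                raise ValueError(f"bare file names only, got {name!r}")
            Path(workdir, name).write_text(content)
        # "-c" makes sys.argv[1:] equal to `argv`; cwd makes the files visible.
        proc = subprocess.run(
            [sys.executable, "-c", code, *argv],
            input=stdin,
            capture_output=True,
            text=True,
            cwd=workdir,
            timeout=timeout,
        )
    return proc.returncode, proc.stdout, proc.stderr


status, out, err = run_with_options(
    "import sys\nprint(sys.argv[1:])\nprint(open('notes.txt').read())\nprint(input())",
    files=[("notes.txt", "hello from a file")],
    argv=["arg1", "arg2"],
    stdin="This string \n will be \n in stdin.",
)
print(status)      # 0
print(out, end="")
```

Because the temporary directory is deleted when the `with` block exits, cleanup happens automatically, much like the behavior described for the jailed tmp directory.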

tests() (For PythonCode objects only) This is used to provide the code with a (ref) list of hash references, each containing one or more entries for "argv", "files", or "stdin" in the format described above. The correct code and the student code are run once for each set of inputs, and their outputs are compared. The student's answer is correct only when the student code's output matches the correct output in all of the test cases.
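The all-or-nothing rule can be sketched in a few lines of Python. To keep the example self-contained, each "script" is modeled as a plain function that maps one test case (a dict with optional `argv` and `stdin` keys) to its stdout. The real macros run actual scripts in the jail. The names `grade`, `reference`, and `forgets_the_check` are hypothetical. Treating an empty test list as a single run with no inputs is also an assumption.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical model: a "script" is any function from one test case to its stdout.
Script = Callable[[Dict], str]


def grade(correct: Script, student: Script, tests: List[Dict]) -> Tuple[bool, List[bool]]:
    """Run both scripts on every test case; the answer is right only if all outputs match."""
    cases = tests or [{}]  # assumption: no tests means a single run with no inputs
    per_case = [student(case) == correct(case) for case in cases]
    return all(per_case), per_case


def reference(case: Dict) -> str:
    # Echo stdin when the first argument is "1", otherwise print "hello".
    args = case.get("argv", [])
    return case.get("stdin", "") if args[:1] == ["1"] else "hello\n"


def forgets_the_check(case: Dict) -> str:
    # Always echoes stdin, so it only agrees with the reference when argv is ["1"].
    return case.get("stdin", "")


tests = [{"argv": ["1"], "stdin": "abc\n"}, {"argv": ["2"], "stdin": "abc\n"}]
print(grade(reference, reference, tests))          # (True, [True, True])
print(grade(reference, forgets_the_check, tests))  # (False, [True, False])
```

Returning the per-case list alongside the overall verdict is what makes it possible to show students which test they failed, rather than just "incorrect".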

error() (For PythonOutput objects only) This returns the error type (e.g. "TypeError" or "SyntaxError") raised by the Python code, if there is one. If there is an error, it overrides the standard output as the correct answer.

evaluate() This runs the jailed code. It returns the status of the jailed code. The stdout and stderr of the code are stored in the stdout and stderr attributes.

status() This returns the status of evaluated code.

stdout() This returns the stdout output of evaluated code.

stderr() This returns the stderr output of evaluated code.

cmp() This returns a comparator for the object. For PythonOutput, the student's answer is compared to the stdout of the code. If the code fails with an error, the correct answer is the class of the error (e.g. SyntaxError). For PythonCode, the student's answer is run as Python code, and the two outputs are compared for equality. If there are multiple sets of test input, the outputs must match for every set.
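Here is a rough Python sketch of the PythonOutput rule: "stdout, unless the code fails, in which case the error's class name." It runs the code with `subprocess` and reads the exception name from the last traceback line. The helpers `expected_answer` and `is_correct` are hypothetical. Parsing stderr this way is our assumption about a reasonable approach, not a description of the macros' internals.

```python
import subprocess
import sys


def expected_answer(code: str, timeout: float = 10.0) -> str:
    """Return what a PythonOutput-style blank would accept for `code` (a sketch).

    If the program fails, the answer is the error's class name; otherwise it
    is the program's standard output.
    """
    proc = subprocess.run(
        [sys.executable, "-c", code], capture_output=True, text=True, timeout=timeout
    )
    if proc.returncode != 0 and proc.stderr.strip():
        # The last traceback line looks like "ZeroDivisionError: division by zero".
        last_line = proc.stderr.strip().splitlines()[-1]
        error_name = last_line.split(":", 1)[0].strip()
        # Drop any module prefix, e.g. "json.decoder.JSONDecodeError".
        return error_name.rsplit(".", 1)[-1]
    return proc.stdout


def is_correct(student_answer: str, code: str) -> bool:
    # Exact comparison; ignoring one trailing newline is an assumption.
    expected = expected_answer(code)
    if expected.endswith("\n"):
        expected = expected[:-1]
    return student_answer == expected


print(expected_answer("print('hi')"), end="")  # hi
print(expected_answer("x = 1 / 0"))            # ZeroDivisionError
print(expected_answer("print("))               # SyntaxError
```

Since a syntax error also ends with a traceback line naming `SyntaxError`, compile-time and runtime failures fall out of the same rule.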

Examples

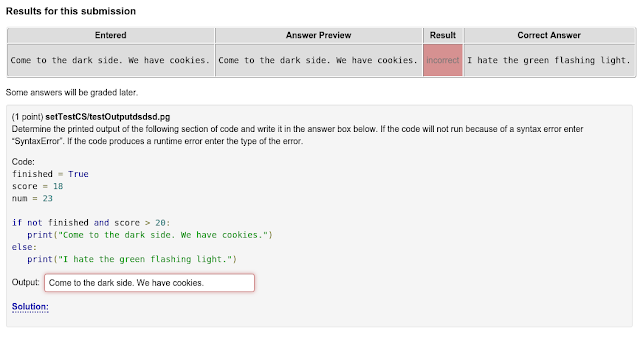

Let's take a look at a couple of examples in greater depth. The following code is for a problem that tests whether students can parse the logic of an if statement.

    DOCUMENT();

    # We use PGML for these problems because it provides code and
    # preformatted environments that are useful for presenting code and
    # output. We also include the WCUCSmacros file.
    loadMacros(
      "PGstandard.pl",
      "PGML.pl",
      "WCUCSmacros.pl"
    );

    TEXT(beginproblem());

    $val = random(1,50);
    $val2 = random(1,50);
    while($val == $val2) { $val2 = random(1,50); }

    # There is an assortment of helper functions in WCUCSmacros
    # which can provide random text strings and variable names.
    $stringt = random_phrase();
    $stringf = random_phrase();
    while($stringt eq $stringf) { $stringf = random_phrase(); }

    # We define the actual Python object, with the code, here.
    # Notice the correct Python formatting and the Perl
    # interpolated values.
    $code = PythonOutput(<<EOS);
    finished = True
    score = $val
    num = $val2
    if not finished and score > 20:
        print("$stringt")
    else:
        print("$stringf")
    EOS

    # Here we actually evaluate the code. If you skip this step
    # the stdout attribute won't be populated. It is done manually so
    # that you can set options before it is run.
    $code->evaluate();

    # Here we have the text of the problem. Notice the code wrapped in
    # ``` and the answer blank definition with the comparator.
    BEGIN_PGML
    Determine the printed output of the following section of code and
    write it in the answer box below. If the code will not run because
    of a syntax error enter "SyntaxError". If the code produces a runtime
    error enter the type of the error.

    Code:

    ```[@ $code->code @]```

    Output: [____]{$code->cmp}{50}
    END_PGML

    # Here we have the solution. The ": " makes preformatted text.
    BEGIN_PGML_SOLUTION
    The correct output is:

    : [@ $code->stdout @]
    END_PGML_SOLUTION

    ENDDOCUMENT();

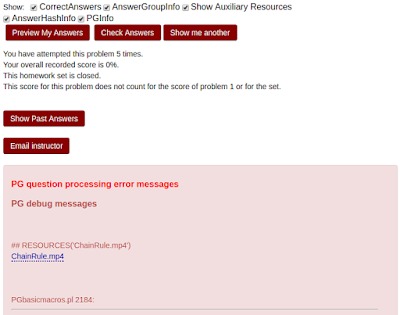

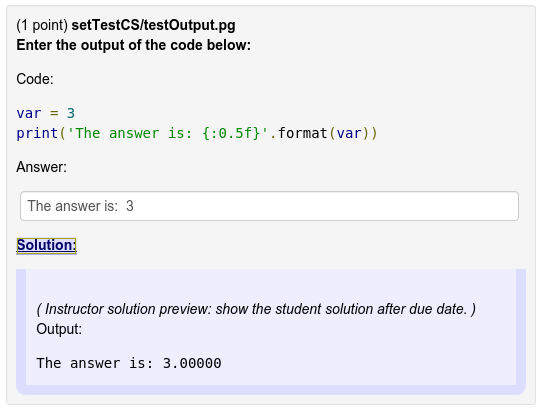

When the code is rendered it looks like the following. Here we have entered an incorrect answer and asked for the correct answer to be displayed.

It is useful to note that the numerical value for score can end up being bigger or smaller than 20. So, had we set finished = False, the result of the conditional would depend on the problem seed, which is a good thing. What is more, because the answer is determined by the Python code itself, all of the quirks of the language are faithfully recreated.
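A quick Python check makes the role of `finished` explicit. The helper `branch_taken` is a hypothetical name that mirrors the problem's conditional over the full range of scores that `random(1,50)` can produce.

```python
def branch_taken(finished: bool, score: int) -> str:
    """Mirror the problem's conditional and report which print() would run."""
    if not finished and score > 20:
        return "if"
    return "else"


# With finished = True, `not finished` is False, so `and` short-circuits
# and the else branch runs for every score the problem can generate.
assert all(branch_taken(True, s) == "else" for s in range(1, 51))

# With finished = False the outcome depends on the random score:
# scores 1..20 take the else branch, scores 21..50 take the if branch.
print(sorted({branch_taken(False, s) for s in range(1, 51)}))  # ['else', 'if']
```

With `finished = True` the problem tests whether students notice the `not`. With `finished = False` it also tests the comparison against 20, and different seeds lead to different answers.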

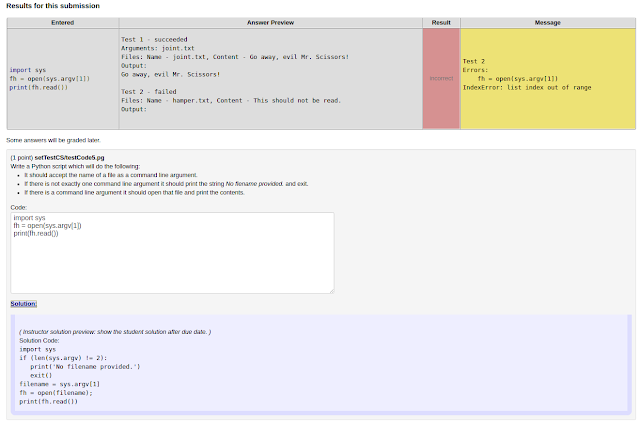

Next, let's look at a more complicated example where students are asked to write a program. In particular, we ask students to read a file name from the first argument, open the file, and print its contents to stdout. The pg code looks like this:

    DOCUMENT();

    loadMacros(
      "PGstandard.pl",
      "PGML.pl",
      "WCUCSmacros.pl",
    );

    TEXT(beginproblem());

    # Here is our "correct" Python code. (We do not actually have to
    # evaluate the code because we are using test cases.)
    $code = PythonCode(<<EOS);
    import sys

    if len(sys.argv) != 2:
        print('No filename provided.')
        sys.exit()

    filename = sys.argv[1]
    with open(filename) as fh:
        print(fh.read())
    EOS

    # We can use the random_word macro to come up with random words
    # for variable and file names.
    $filename = random_word().'.txt';

    # This is where we populate the input data for our tests. We have
    # two tests. The first passes the file name as an argument and
    # creates a file with that name containing a random phrase.
    $code->tests({argv => [$filename], files => [[$filename, random_phrase()]]},
      # The second test has a file but no argument. In this case the script
      # prints "No filename provided." and exits without reading the file.
      {files => [[random_word().'.txt', 'This should not be read.']]});

    # Here is the PGML for the problem text. Notice the markdown-style
    # text formatting.
    BEGIN_PGML
    Write a Python script which will do the following:

    * It should accept the name of a file as a command line argument.
    * If there is not exactly one command line argument it should print the string _No filename provided._ and exit.
    * If there is a command line argument it should open that file and print the contents.

    Code:

    [_______]*{$code->cmp}
    END_PGML

    # We can also provide our code as a solution.
    BEGIN_PGML_SOLUTION
    Solution Code:

    ```[@ $code->code @]```
    END_PGML_SOLUTION

    ENDDOCUMENT();

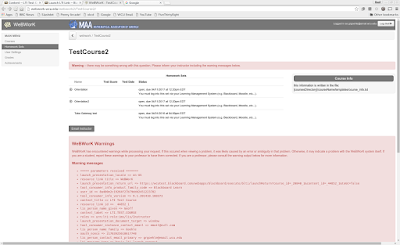

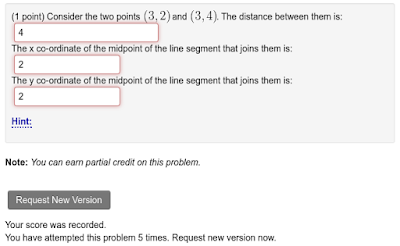

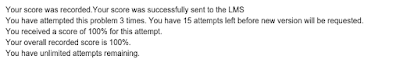

Now let's see what this looks like when run. In the following example, a student has submitted a script that opens the file and prints it but does not check the number of arguments first.
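To see why that submission fails, the sketch below runs a similar script against the problem's two test cases. It uses `subprocess` and a temporary directory. The script text and the helper `run` are hypothetical stand-ins, and none of this is sandboxed. The first test passes. In the second test there is no argument, so `sys.argv[1]` raises an `IndexError` instead of printing "No filename provided.".

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Sequence, Tuple

# A submission like the one described: it never checks len(sys.argv).
BUGGY = """
import sys
with open(sys.argv[1]) as fh:
    print(fh.read())
"""


def run(code: str, argv: Sequence[str], files: Sequence[Tuple[str, str]]) -> Tuple[str, str]:
    """Run `code` with argv in a temp directory holding `files`; return (stdout, stderr)."""
    with tempfile.TemporaryDirectory() as workdir:
        for name, content in files:
            Path(workdir, name).write_text(content)
        proc = subprocess.run(
            [sys.executable, "-c", code, *argv],
            capture_output=True, text=True, cwd=workdir, timeout=10,
        )
    return proc.stdout, proc.stderr


# Test 1 (argument and matching file): the buggy script behaves correctly.
out1, _ = run(BUGGY, ["notes.txt"], [("notes.txt", "a random phrase")])
print(repr(out1))  # 'a random phrase\n'

# Test 2 (file present, no argument): the reference prints
# "No filename provided." but the buggy script crashes instead.
out2, err2 = run(BUGGY, [], [("decoy.txt", "This should not be read.")])
print(repr(out2))                     # ''
print(err2.strip().splitlines()[-1])  # IndexError: list index out of range
```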

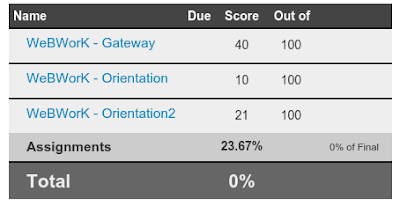

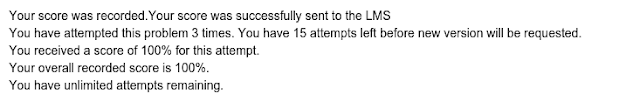

Notice that students are shown the results of each of their tests, as well as any errors reported during any of the tests. The "show correct answer" output shows the correct output, and the correct code is contained in the popover for the correct answer. In addition, the system uses pylint to comment on students' syntax and formatting. When it finds problems, it posts them in a comment box like the one below.

These features represent the basic foundation of our Python macros. As we actually write problems and use them in class, I'm sure we will come up with new features and best practices. Check back in a couple of semesters to see how things have progressed.